Consilient Data in the Next Phase of Data

It was after talking with Mark Madsen at Strata that the idea of Consilience really took hold. (At that point, I didn’t know the word, just the idea.) There were various iterations of the idea using words such as ‘interoperable’ and ‘composable’, but nothing was satisfying enough to make the idea stick.

It finally all came together one evening while talking to Nick Harkaway on Twitter, who suggested: “@CodeBeard (Interesting word here might be “consilient”)”.

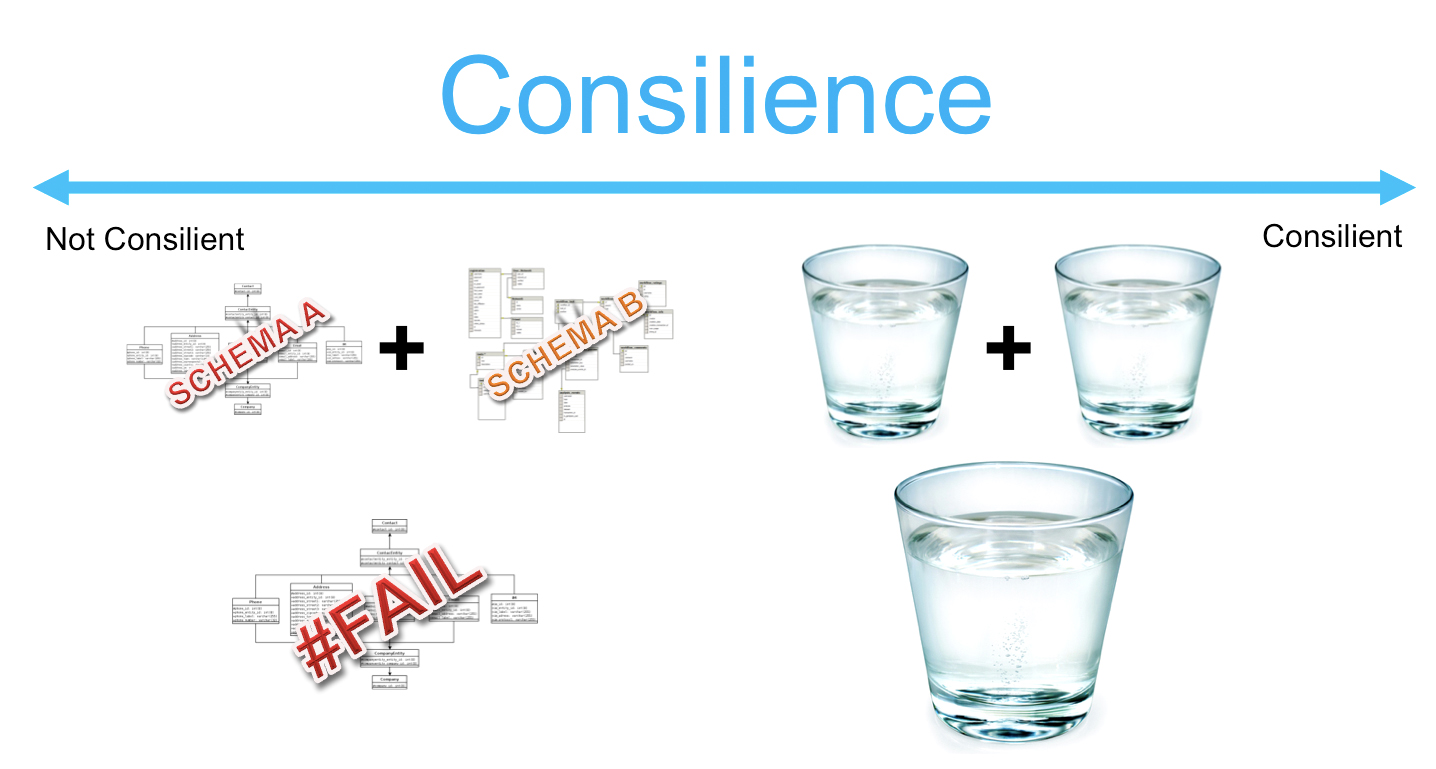

Consilience is usefully defined as ‘the principle that evidence from independent, unrelated sources can “converge” to strong conclusions’. It was also the title of a book by E.O. Wilson discussing the unification of science.

Consilience literally means ‘jumping together’.

In Relation to Data?

At DataShaka we are focused on solving the Variety problem within data. Our TCSV methodology results in a single, unified set of data. TCSV can be combined regardless of source or source format: TCSV from multiple sources, when combined, creates a valid unified set.

TCSV is consilient.

It turns out that consilience in data is unusual. This is because of the content specificity built into most data formats, storage systems and methodologies. Only data content that fits within the known ‘Schema’ is valid; data from outside the schema is invalid.

Two sets of schema-bound data are therefore not consilient.

Content Specificity.

When a schema is built around data content and that schema is used to define a data structure, the data structure is known as ‘content specific’. Content specific data structures are everywhere within software development and data systems. Relational databases and spreadsheets are built from tables where the column headings and table relationships define the data structure. A JSON document is content specific when the keys (of a key-value pair) contain data content. Similarly, XML is content specific when the node and attribute names contain data content.
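As a rough illustration of content specificity, the sketch below uses two invented records whose keys carry data content. The record contents, the `SCHEMA_A` set and both helper functions are hypothetical and only stand in for whatever schema check a real system performs.

```python
# Hypothetical records from two sources; the keys themselves carry data content.
source_a = {"date": "2013-04-01", "facebook_likes": 120, "twitter_followers": 45}
source_b = {"day": "2013-04-01", "temperature_c": 11.5}

# A content-specific schema (assumed for illustration): the only keys the structure accepts.
SCHEMA_A = {"date", "facebook_likes", "twitter_followers"}


def fits_schema(record, schema):
    """Return True only when every key of the record is known to the schema."""
    return set(record) <= schema


def unknown_keys(record, schema):
    """List the keys that fall outside the schema, in alphabetical order."""
    return sorted(set(record) - schema)


print(fits_schema(source_a, SCHEMA_A))   # the record the schema was designed around
print(fits_schema(source_b, SCHEMA_A))   # a record from an unrelated source
print(unknown_keys(source_b, SCHEMA_A))  # the keys that make it invalid
```

The second record is perfectly good data, but because its content lives in the keys, the structure built around the first source has no place for it.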

Content specificity precludes consilience.

Content Agnosticism.

Content agnosticism is the philosophical opposite of content specificity. A content agnostic data structure pushes data content to the value level and uses ‘something else’ to define the structure of the data.

In the case of the TCSV ontology, it is Time, Content, Signal and Value that is the ‘something else’ used to define the structure of the data.
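A minimal sketch of what pushing content to the value level could look like is shown below. It is not DataShaka’s implementation: the `Row` type, the `to_rows` helper, the sample record and the meaning given to each of the four fields are all assumptions made for illustration.

```python
from typing import NamedTuple


class Row(NamedTuple):
    """One content-agnostic row; the field meanings below are assumed, not official."""
    time: str      # when the value was observed
    content: str   # what the observation is about
    signal: str    # which measure is being reported
    value: float   # the observed value


def to_rows(record, time_key, content):
    """Turn each non-time key of a content-specific record into a value-level row."""
    time = record[time_key]
    return [
        Row(time, content, key, val)
        for key, val in record.items()
        if key != time_key
    ]


# Hypothetical content-specific record: the keys 'likes' and 'followers' are data content.
record = {"date": "2013-04-01", "likes": 120, "followers": 45}

for row in to_rows(record, time_key="date", content="facebook"):
    print(row)
```

After the conversion, the names that used to be keys sit in the `signal` field as ordinary values, so the structure no longer changes when a new measure appears.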

Tables, JSON and XML can all be used in a content agnostic way. When you adopt a content agnostic approach (apart from having to fight with the content specificity built into a large amount of available software), the challenge of variety becomes much easier to tackle.

Content agnosticism promotes consilience.
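To make that claim concrete, here is a small sketch in which two unrelated sources, each already expressed as (time, content, signal, value) tuples, are combined without any schema mapping. The sample rows, the meaning assigned to each position and the `unify` and `signals_on` helpers are hypothetical.

```python
# Rows are (time, content, signal, value) tuples; the meaning of each position is assumed.
web_rows = [
    ("2013-04-01", "facebook", "likes", 120),
    ("2013-04-02", "facebook", "likes", 131),
]
weather_rows = [
    ("2013-04-01", "london", "temperature_c", 11.5),
]


def unify(*row_sets):
    """Combine any number of row sets into one sorted, de-duplicated list."""
    return sorted(set().union(*row_sets))


def signals_on(rows, day):
    """List the distinct signals reported for a given day, whatever their source."""
    return sorted({signal for time, _content, signal, _value in rows if time == day})


unified = unify(web_rows, weather_rows)
for row in unified:
    print(row)

# One question, answered across both sources without knowing which source held what.
print(signals_on(unified, "2013-04-01"))
```

Because every row has the same four-part shape, combining sources is a plain set union; no source needs to know the other’s column names.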

Why is consilience in data important?

Data is not a new phenomenon, and Big Data as a ‘naming of parts’ has done great things for promoting the data agenda within organisations. Using data as a route to learning supports organisational learning, which many regard as an organisational imperative.

While solving for the sheer amount (Volume) or speed (Velocity) of data, Big Data has left the problem of Variety by the wayside. Variety arises at both the acquisition end of a data system and the business-questions end.

At the acquisition end of a data system, the struggle of change management is the best understood form of the Variety challenge within Big Data. Consilience helps here because, with a truly consilient data ecosystem, data acquisition is easy. Data from multiple sources can be unified as a matter of course. Because of this, a data system becomes increasingly flexible and helpful to a user.

Consilience makes much of the traditional ETL process redundant.

At the other end, when a user queries a data system built around consilience, they are not frustrated by the ‘edges’ of the system that limit what they can find out. The user is limited only by the range of data collected and, with a consilient data ecosystem, requesting new data does not place a burden on the data system.

Consilient data that becomes a unified Chaordic set is able to fulfil a user’s data need on the user’s terms, not on some historically designed terms of the data system.

In Conclusion

In this modern (Big Data), connected (Smartphones), advertising-ridden (Facebook, Twitter, Google) world, where the Internet of Things means devices from your watch and fridge to your thermostat and house plants spit out data, the challenge of bringing data together to a valuable end will become increasingly difficult unless this data is consilient.

Data held in TCSV (our Content Agnostic Master Ontology) is fully consilient. Regardless of source, two sets of TCSV work together as one unified set.

Consilient data is one part of the 3Cs which make up the Next Phase of Data. Read more about the Next Phase of Data here.

If you would like to know how we can help make your data ‘jump together’, get in touch! I will also be speaking on stage at the Big Data Week on Tuesday 7th May, so come and grab me afterwards.

Phil